A cautionary view on AI

Welcome to the fourteenth edition of ‘3-2-1 by Story Rules’.

A newsletter recommending good examples of storytelling across:

• 3 tweets

• 2 articles, and

• 1 long-form content piece

Each comes with my short summary or take, and sometimes an insightful extract.

Let’s dive in.

🐦 3 Tweets of the week

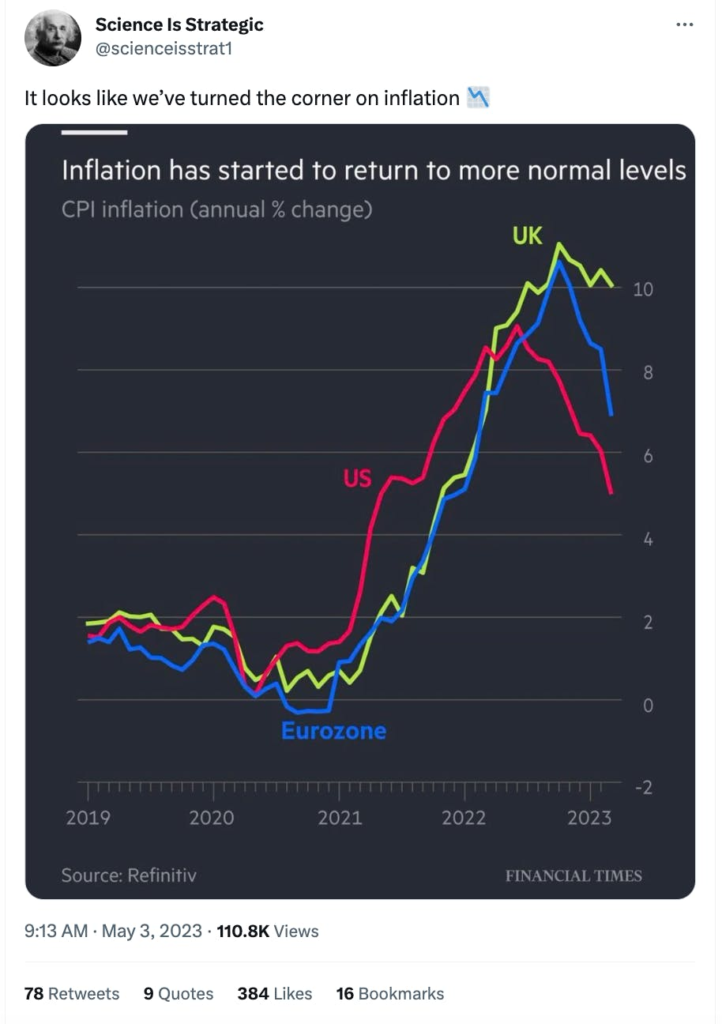

Some welcome good news on inflation in the West.

Good use of a Stacked Column chart to make a striking point.

A hilarious set of outdoor ads by an oat-milk company – check out the entire set.

📄 2 Articles of the week

Historian and peerless storyteller Yuval Noah Harari argues for curbs on AI.

Some extracts (emphasis mine):

In recent years the QAnon cult has coalesced around anonymous online messages, known as “Q drops”. Followers collected, revered and interpreted these Q drops as a sacred text. While to the best of our knowledge all previous Q drops were composed by humans, and bots merely helped disseminate them, in future we might see the first cults in history whose revered texts were written by a non-human intelligence. Religions throughout history have claimed a non-human source for their holy books. Soon that might be a reality.

—

We can still regulate the new AI tools, but we must act quickly. Whereas nukes cannot invent more powerful nukes, AI can make exponentially more powerful AI. The first crucial step is to demand rigorous safety checks before powerful AI tools are released into the public domain. Just as a pharmaceutical company cannot release new drugs before testing both their short-term and long-term side-effects, so tech companies shouldn’t release new AI tools before they are made safe. We need an equivalent of the Food and Drug Administration for new technology, and we need it yesterday.

b. Geoffrey Hinton tells us why he’s now scared of the tech he helped build (MIT Technology Review)

Ex-Google AI scientist Geoffrey Hinton has been in the news recently after he quit the company and gave a bunch of interviews sharing his concerns about AI.

“Our brains have 100 trillion connections,” says Hinton. “Large language models have up to half a trillion, a trillion at most. Yet GPT-4 knows hundreds of times more than any one person does. So maybe it’s actually got a much better learning algorithm than us.”

—

(On AI coming up with bullshit answers):

Hinton has an answer for that too: bullshitting is a feature, not a bug. “People always confabulate,” he says. Half-truths and misremembered details are hallmarks of human conversation: “Confabulation is a signature of human memory. These models are doing something just like people.”

The difference is that humans usually confabulate more or less correctly, says Hinton. To Hinton, making stuff up isn’t the problem. Computers just need a bit more practice.

Hinton is not too optimistic about lawmakers taking any substantive action:

Bengio agrees with Hinton that these issues need to be addressed at a societal level as soon as possible. But he says the development of AI is accelerating faster than societies can keep up. The capabilities of this tech leap forward every few months; legislation, regulation, and international treaties take years.

This makes Bengio wonder whether the way our societies are currently organized—at both national and global levels—is up to the challenge. “I believe that we should be open to the possibility of fairly different models for the social organization of our planet,” he says.

Does Hinton really think he can get enough people in power to share his concerns? He doesn’t know. A few weeks ago, he watched the movie Don’t Look Up, in which an asteroid zips toward Earth, nobody can agree what to do about it, and everyone dies—an allegory for how the world is failing to address climate change.

“I think it’s like that with AI,” he says, and with other big intractable problems as well. “The US can’t even agree to keep assault rifles out of the hands of teenage boys,” he says.

Hat tip: Prahlad Viswanathan

📖 1 Long-form read of the week

a. ‘Quantum computing could break the internet. This is how’ by the Financial Times

This is a fabulous visual explainer of the next big thing in technology: quantum computing. I’ll admit I still don’t get a lot of it, but this is the most accessible and engaging content I’ve come across on the topic.

They call it Q-day. That is the day when a robust quantum computer, like this one, will be able to crack the most common encryption method used to secure our digital data.

—

As well as being enticed by the economic possibilities, governments are concerned about the security implications of developing quantum computers. At present, the most common method used to secure all our digital data relies on the RSA algorithm, which is vulnerable to being cracked by a quantum machine.

Image by Gerd Altmann from Pixabay