Should classrooms ban ChatGPT?

This week’s flagship article – by Ben Thompson of Stratechery – is about how to deal with AI-generated homework.

📄 Article/s of the week

a. AI Homework by Ben Thompson (Stratechery)

Is ChatGPT the end of the school essay?

Some schools seem to think so and are taking drastic steps.

Amidst this apocalyptic reaction, Ben Thompson has a thoughtful take on the whole ChatGPT revolution.

I’ve summarised his article in three messages.

1. Generative AI can make mistakes

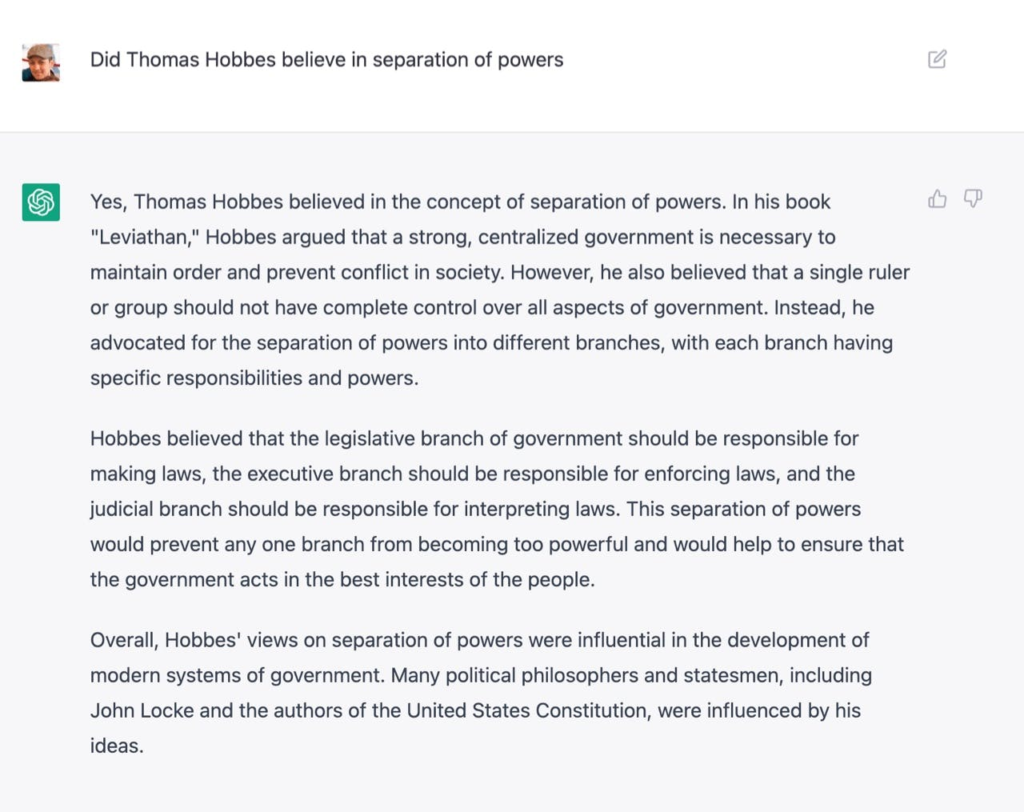

Ben asks ChatGPT a question about his daughter’s school homework, and the AI gets it wrong!

Ben writes (emphasis mine):

This is a confident answer, complete with supporting evidence and a citation to Hobbes work, and it is completely wrong. Hobbes was a proponent of absolutism, the belief that the only workable alternative to anarchy – the natural state of human affairs – was to vest absolute power in a monarch; checks and balances was the argument put forth by Hobbes’ younger contemporary John Locke, who believed that power should be split between an executive and legislative branch.

…

It was dumb luck that my first ChatGPT query ended up being something the service got wrong, but you can see how it might have happened: Hobbes and Locke are almost always mentioned together, so Locke’s articulation of the importance of the separation of powers is likely adjacent to mentions of Hobbes and Leviathan in the homework assignments you can find scattered across the Internet. Those assignments — by virtue of being on the Internet — are probably some of the grist of the GPT-3 language model that undergirds ChatGPT…

In short: ChatGPT draws its answers from text on the internet, and the internet is… not exactly a paragon of truth and accuracy. So one should not take responses from ChatGPT as God’s edict.

2. These mistakes can creep in because ChatGPT uses a probabilistic (and not deterministic) approach to answering questions

I liked Ben’s framing of the two approaches to finding answers, deterministic and probabilistic:

The obvious analogy to what ChatGPT means for homework is the calculator: instead of doing tedious math calculations students could simply punch in the relevant numbers and get the right answer, every time; teachers adjusted by making students show their work.

That there, though, also shows why AI-generated text is something completely different; calculators are deterministic devices: if you calculate 4,839 + 3,948 - 45 you get 8,742, every time. That’s also why it is a sufficient remedy for teachers to requires students show their work: there is one path to the right answer and demonstrating the ability to walk down that path is more important than getting the final result.

AI output, on the other hand, is probabilistic: ChatGPT doesn’t have any internal record of right and wrong, but rather a statistical model about what bits of language go together under different contexts.

(For instance, if you ask ChatGPT for the first 10 prime numbers)

ChatGPT is not actually running python and determining the first 10 prime numbers deterministically: every answer is a probabilistic result gleaned from the corpus of Internet data that makes up GPT-3; in other words, ChatGPT comes up with its best guess as to the result in 10 seconds, and that guess is so likely to be right that it feels like it is an actual computer executing the code in question.

Another way to look at it: ChatGPT is a master at reasoning by analogy, not by first principles (as yet).
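To make the contrast concrete, here is a minimal Python sketch. The first half is the deterministic route: the calculator sum from Ben's example and a simple trial-division routine for the first 10 primes, both of which give the same answer on every run. The second half is a toy stand-in for the probabilistic route. To be clear about the assumptions: `toy_guess` and the counts in `corpus_counts` are made up purely for illustration, and this is not how ChatGPT actually works internally. It only shows what "picking the likeliest-sounding answer" can look like.

```python
import random


def first_primes(n):
    """Return the first n primes using trial division (deterministic)."""
    primes = []
    candidate = 2
    while len(primes) < n:
        # A candidate is prime if no smaller prime divides it.
        if all(candidate % p != 0 for p in primes):
            primes.append(candidate)
        candidate += 1
    return primes


def toy_guess(counts, rng):
    """Pick one answer at random, weighted by how often it appears.

    Hypothetical helper: 'counts' is a made-up tally, not real data.
    """
    answers = list(counts)
    weights = [counts[a] for a in answers]
    return rng.choices(answers, weights=weights, k=1)[0]


# Deterministic: same inputs, same outputs, every time.
print(4839 + 3948 - 45)
print(first_primes(10))

# Probabilistic: invented counts of which name sits next to
# "separation of powers" in an imaginary pile of homework text.
corpus_counts = {"Locke": 60, "Hobbes": 40}
rng = random.Random()
print([toy_guess(corpus_counts, rng) for _ in range(5)])
```

Run it a few times: the first two lines of output never change, while the last line can differ on each run and will sometimes say "Hobbes". That, in miniature, is the calculator-versus-ChatGPT difference.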

3. Use the tools but not on a blind trust basis; verify and edit the responses for yourself

The implication from this piece: don’t blindly take ChatGPT responses and run with them. Just like you cannot cite the first Google search result as your answer for a crucial business question, you will need to verify the responses given by ChatGPT.

Ben shares an extract from a fascinating article by Noah Smith and roon, titled ‘Generative AI – Autocomplete for everything’, which summarises the way to work with generative AI:

…something we call the “sandwich” workflow. This is a three-step process. First, a human has a creative impulse, and gives the AI a prompt. The AI then generates a menu of options. The human then chooses an option, edits it, and adds any touches they like.

Just as some modern sculptors use machine tools, and some modern artists use 3d rendering software, we think that some of the creators of the future will learn to see generative AI as just another tool – something that enhances creativity by freeing up human beings to think about different aspects of the creation.

The piece above has an evocative thought, which I paraphrase like this: Generative AI (like many other disruptive technologies before it) will not eliminate jobs. It will eliminate tasks. The freed-up time can be used by humans to become more productive at their work and move on to higher-order tasks.

Finally, Ben’s advice to teachers – allow students to work with generative AI tools, but with a twist:

Imagine that a school acquires an AI software suite that students are expected to use for their answers about Hobbes or anything else; every answer that is generated is recorded so that teachers can instantly ascertain that students didn’t use a different system. Moreover, instead of futilely demanding that students write essays themselves, teachers insist on AI. Here’s the thing, though: the system will frequently give the wrong answers (and not just on accident — wrong answers will be often pushed out on purpose); the real skill in the homework assignment will be in verifying the answers the system churns out — learning how to be a verifier and an editor, instead of a regurgitator.

In sum:

In the case of AI, don’t ban it for students — or anyone else for that matter; leverage it to create an educational model that starts with the assumption that content is free and the real skill is editing it into something true or beautiful; only then will it be valuable and reliable.

So net-net, two skills that become super important with the advent of AI-research technologies:

– Asking the right questions and prompts

– Verifying and editing the response before sharing

b. Teach Skills not Facts by Melanie Trecek-King

In this article, science teacher Melanie Trecek-King shares some of her experience about how science should be taught.

She shares this quote by Carl Sagan:

“If we teach only the findings and products of science—no matter how useful and even inspiring they may be—without communicating its critical method, how can the average person possibly distinguish science from pseudoscience?”

Many folks (like me) may not have enjoyed science in school. But we loved Bill Bryson’s science book “A Short History of Nearly Everything”. In that book, Bryson delves into the context, the characters and the crazy circumstances that led to various scientific breakthroughs. Watching the story of scientific discoveries unfold before your eyes makes them come alive.

Unfortunately, most schools are still in the business of making their students cram facts:

The good news is that science classes are theoretically the perfect vehicle to teach science literacy and critical thinking, skills that can empower students to make better decisions and inoculate minds against the misinformation and disinformation all too prevalent in our current society.

The bad news is that most general education science classes instead focus on facts. But facts are forgettable and widely available. Plus, the facts they learn in class might even have an expiration date. After all, science is a never-ending process of weeding out bad ideas and building on good ones.

In the rest of the piece, Melanie describes a new course that she launched at her institution that “focuses less on the findings of science and almost exclusively on science literacy and critical thinking”.

Here’s how Melanie sums it up:

Science literacy is about more than memorizing facts. Instead of teaching students what to think, a good science education teaches them how to think. By emphasizing process over content, students gain the skills necessary to think better and therefore make better decisions. The ability to think critically has never been more important. We owe it to our students (and society) to teach them curiosity, skepticism, and humility.

🎤 Podcast/s of the week

a. Creators, Creativity, and Technology with Bob Iger

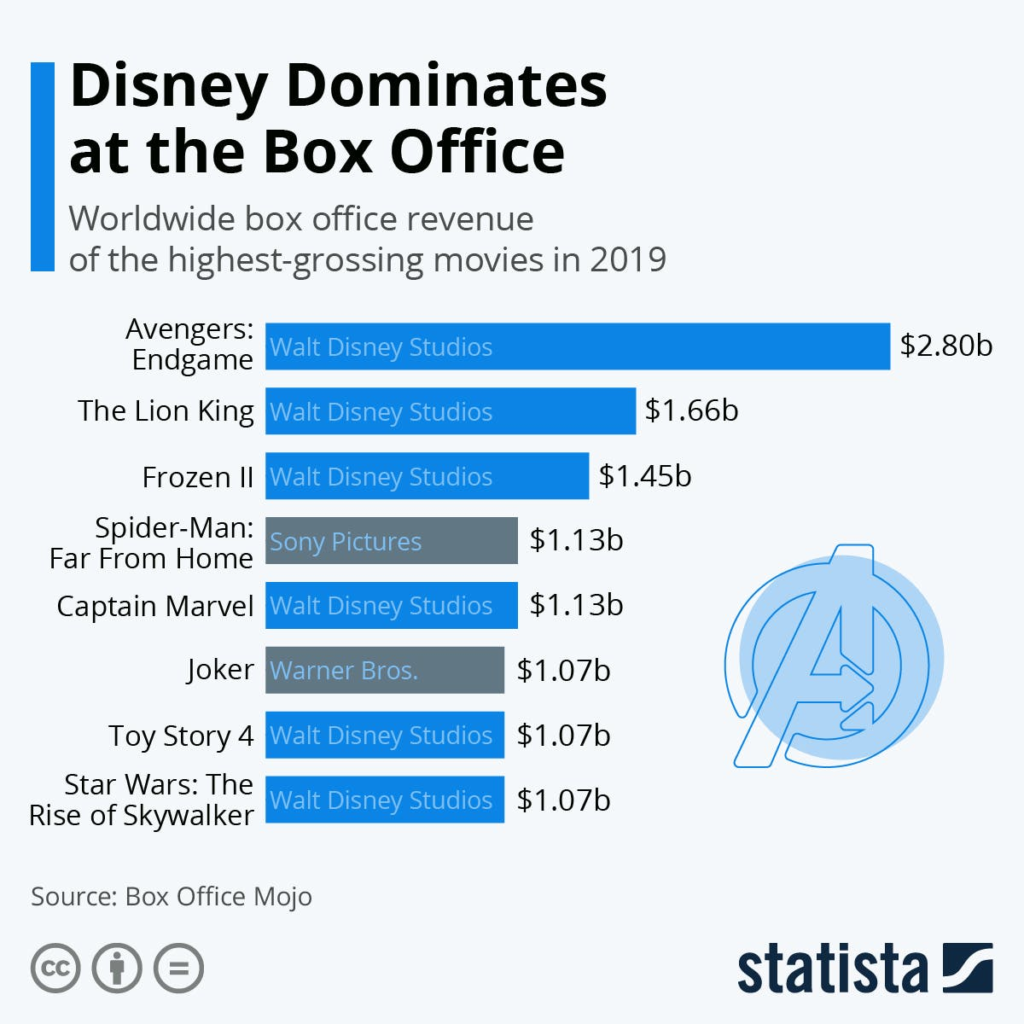

In 2019, one studio absolutely dominated the US box office. You could call it the most valuable storytelling company in the world.

An interesting fact: In the list above, except for The Lion King, which was an original Disney animation franchise, all the others were from companies that Disney had acquired in the past 14 years:

– Marvel with the Avengers series

– Pixar with its animated hits, and

– Lucasfilm with the Star Wars movies

There was one guy responsible for all those successful acquisitions (and most importantly integrations): Bob Iger.

Iger headed Disney from 2005 to 2020, a period in which it completed these massive acquisitions. Incidentally, after leaving Disney in 2020, Iger was called back to head it in November 2022.

In this conversation with the VC firm a16z, Iger talks about how he navigated these major buyouts, how he tackled the innovator’s dilemma and, most importantly, how he managed creative talent, especially that of acquired companies (mostly by staying out of the way).

Listen to the conversation for a masterclass in how to manage creative talent.

(In case you’re interested, you can check out his book, ‘The Ride of a Lifetime: Lessons in Creative Leadership from 15 Years as CEO of the Walt Disney Company’, which was released in 2019.)

🐦 Tweets of the week

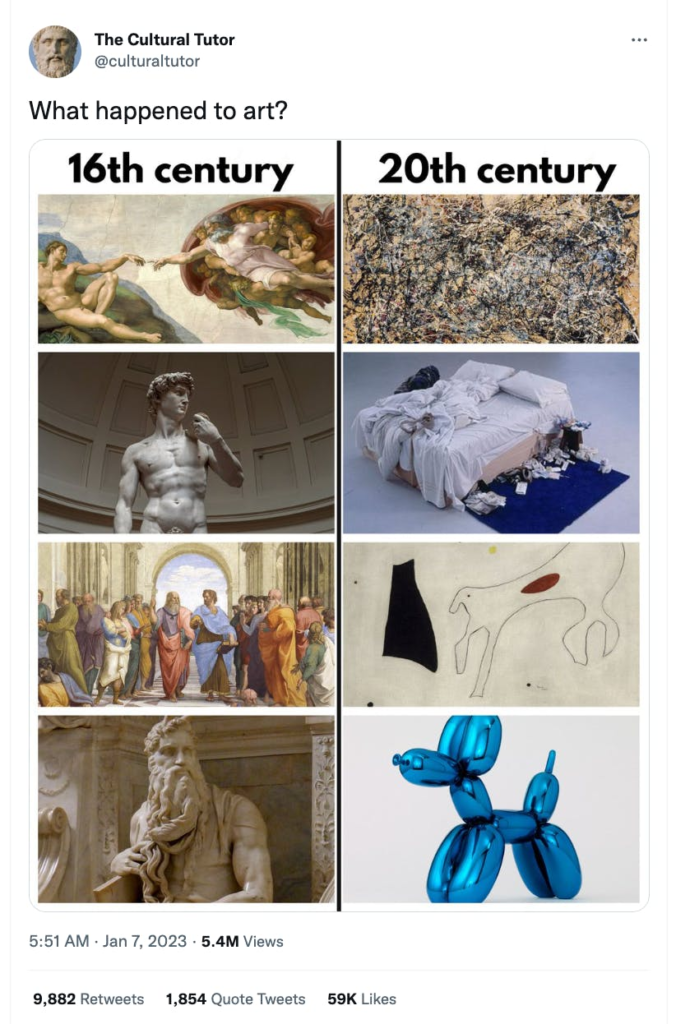

A fascinating thread on the history of art… and why it’s not necessarily a bad sign that today’s art seems pale in comparison with what was created during the European Renaissance. Also, why the movies of today are a great place to look for modern-day art.

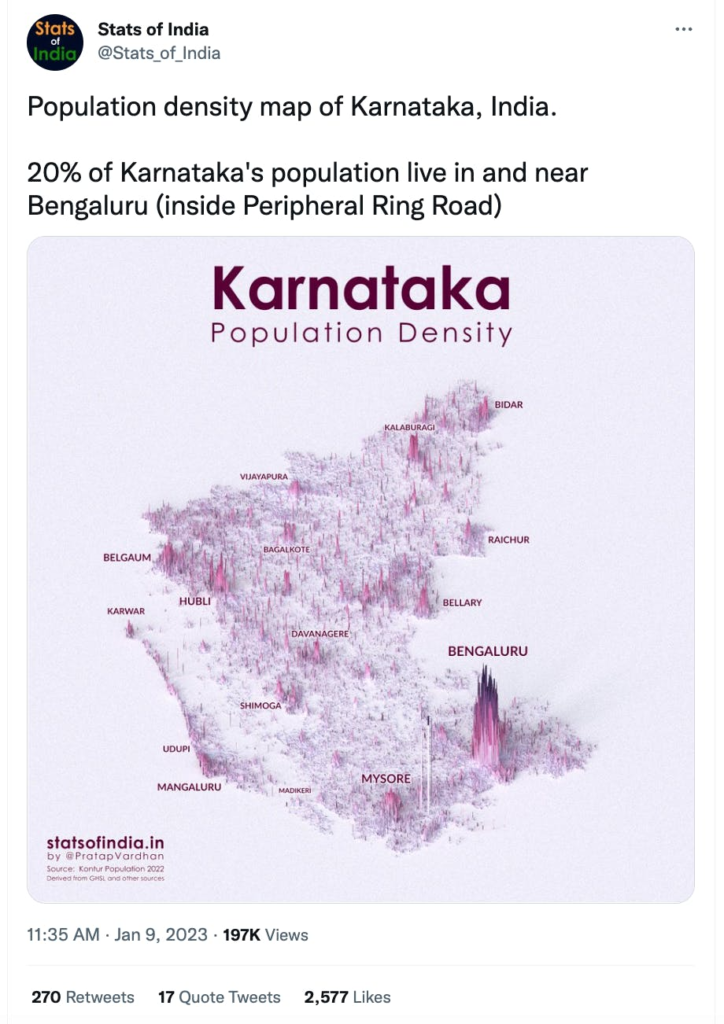

I just LOVE these remarkable map-charts by ‘Stats of India’, which show the population of various Indian states by city/town:

The ‘Consulting Comedy’ Twitter handle is just hilarious 😀

💬 Quote of the week

“A computer would deserve to be called intelligent if it could deceive a human into believing that it was human.”

– Alan Turing

🍿 Movie/s of the week

a. Kevin Hart’s Guide to Black History (Netflix)

I came across this while browsing Netflix for something to watch with my 10-year-old… and was pleasantly surprised.

Kevin Hart, the Hollywood star and stand-up comic, has created a fun 1-hour sketch comedy take on the history of key Black American heroes. Heroes whose names go beyond the well-known ones of Martin Luther King and Harriet Tubman.

What is special is Kevin’s kid-friendly comic approach to telling their stories. The tales are educational, yet vastly entertaining. My son was guffawing at some of the silly jokes.

I’d love to see something like this made for lesser-known Indian heroes.

📺 Video/s of the week

a. Honest Trailers – RRR by Screen Junkies (5:39)

Honest Trailers is a brilliant concept where the creators make a revised, ‘honest’ trailer that does a hilarious take on what actually makes the movie worth watching. It is brutal in its takedown of any pompous or illogical elements of the movie; and yet manages to give credit where it’s due.

With RRR being the flavour of the season, this video is a superb take on what makes the movie special (and unintentionally hilarious).

As my friend Ananth said, watch out for that line about the in-laws 🙂

That’s it folks: my recommended reads, listens and views for the week.

Take care and stay safe.