The need to fix and not flee from critical problems

Welcome to the tenth edition of ‘3-2-1 by Story Rules’.

A newsletter recommending good examples of storytelling across:

• 3 tweets

• 2 articles, and

• 1 long-form content piece

Each will be accompanied by my short summary or take, and sometimes by an insightful extract.

Let’s dive in.

🐦 3 Tweets of the week

Just get started and you will get the momentum.

Just when you thought that you could take that expensive ‘Prompt Engineering’ course.

What you see depends on the perspective you take. (Turn your phone 90 degrees clockwise after switching off auto-rotate.)

📄 2 Articles of the week

a. Quitters by Scott Galloway

Prof. Galloway makes an impassioned plea to all Americans (though the message is applicable to nations everywhere) to stay and reform broken societies rather than decrying them and looking to secede.

The government is gridlocked, parties polarized, teens depressed. There’s a lot wrong with America, and we have reason to be upset about it.

The question is: What do we do about it? For too many, the answer is quit: Instead of fixing the Fed, start a different currency. Instead of healing our divides, split the nation in two. Instead of making this planet more habitable, colonize other planets or put a headset on that takes you to a meta (better) universe. But here’s the thing: We’re stuck here, and with each other.

…

History’s greatest leaders aren’t quitters, but reformers. Abraham Lincoln felt it was his “duty to preserve the Union,” not to accept its division and cauterize the wound. Despite the headlines, and all the work to be done, our nation’s arc still bends toward bringing groups together. From Civil Rights to gay marriage, America still strives to bring people closer under the auspices of a shared belief in a union that offers liberty and the pursuit of happiness.

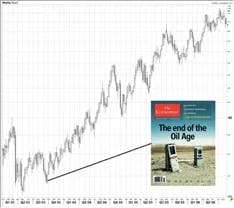

b. A somewhat empirical look at The Magazine Cover Indicator by Brent Donnelly

Magazine covers tend to make bold pronouncements about ongoing trends or the future direction of key sectors (e.g. ‘The End of Oil’).

This article analyses several covers of ‘The Economist’ and ‘Time’ to see what would have happened if you had invested based on each cover’s prediction. The answer: you’d have lost money.

“Covers of The Economist and Time Magazine that point in a direction for a particular asset class are contrarian. They work as contrary indicators in both directions.

If you “go with” a cover of The Economist (long or short), you should expect to lose roughly 10% of your investment in the year after it hits the newsstands.”

🎤 1 long-form listen of the week

a. Sam Altman: OpenAI CEO on GPT-4, ChatGPT, and the Future of AI

Sam Altman is perhaps one of the most important leaders on the planet right now. In this podcast episode, he comes across as thoughtful and open to changing his mind.

This example makes an interesting case for the continued relevance of the human factor, even when AI is better at a task:

Altman: You know, when Kasparov lost to (IBM’s) Deep Blue, somebody said… that chess is over now. If an AI can beat the best human at chess, then no one’s going to bother to keep playing, right? Cause like, what’s the purpose of us or whatever. That was 30 years ago, 25 years ago…

(But) I believe that chess has never been more popular than it is right now. And people keep wanting to play and wanting to watch. And by the way, we don’t watch two AIs play each other, which would be a far better game in some sense than whatever else. But that’s, that’s not what we choose to do. Like we are somehow much more interested in what humans do in this sense…

And yes, Altman does acknowledge the potential dangers of a super-intelligent AI (emphasis mine):

Lex Fridman: … there’s some folks who consider all the different problems with a super intelligent AI system. So one of them is Eliezer Yudkowsky. He warns that AI will likely kill all humans. And there’s a bunch of different cases, but I think one way to summarize it is that it’s almost impossible to keep AI aligned (to human interests) as it becomes super intelligent. Can you steelman the case for that? And to what degree do you disagree with that trajectory?

Sam Altman: So first of all, I’ll say, I think that there’s some chance of that. And it’s really important to acknowledge it because if we don’t talk about it, if we don’t treat it as potentially real, we won’t put enough effort into solving it. And I think we do have to discover new techniques to be able to solve it. I think a lot of the predictions, this is true for any new field, but a lot of the predictions about AI in terms of capabilities, in terms of what the safety challenges and the easy parts are going to be have turned out to be wrong. The only way I know how to solve a problem like this is iterating our way through it, learning early and limiting the number of one-shot-to-get-it-right scenarios that we have.

That’s all from this week’s edition.